Cognitive Errors in Anesthesiology

Last updated: 01/04/2023

Key Points

- Cognitive errors are thought-process errors, usually linked to failed biases or heuristics.

- Cognitive errors occur when subconscious processes and mental shortcuts are relied upon excessively or used under the wrong circumstances.

Introduction

- The study of how we make decisions, particularly under stressful situations, has received much attention in the safety literature. Within the last ten years, the study of how we make clinical decisions as anesthesiologists has begun to receive increasing attention.

- Cognitive errors are thought-process errors, usually linked to failed biases or heuristics.1,2

- Biases are systematic preferences to exclude certain perspectives on decision possibilities.2 They result from subconscious influences from life experiences and individual preferences.

- Heuristics are cognitive shortcuts used by humans to reduce the cognitive costs of decision-making.2

- While both heuristics and biases are frequently used in medicine to make quick clinical decisions, cognitive errors occur when these subconscious processes and mental shortcuts are relied upon excessively or used under the wrong circumstances.1 It is important to note that cognitive errors are not knowledge gaps.

Clinical Decision-Making

- Clinical decision-making is a complex process, and the quality of our clinical decisions depends on several factors.3

- Individual factors: general affective state, personality, intelligence, fatigue, cognitive load, sleep deprivation, distractions, etc.

- Ambient conditions in the immediate environment: context, team factors, patient factors, resource limitations, ergonomic factors, etc.

- Several models of decision-making have been proposed; the dual-process theory is discussed here. According to this theory, human cognitive tasks are processed by two systems that operate in parallel.3,4

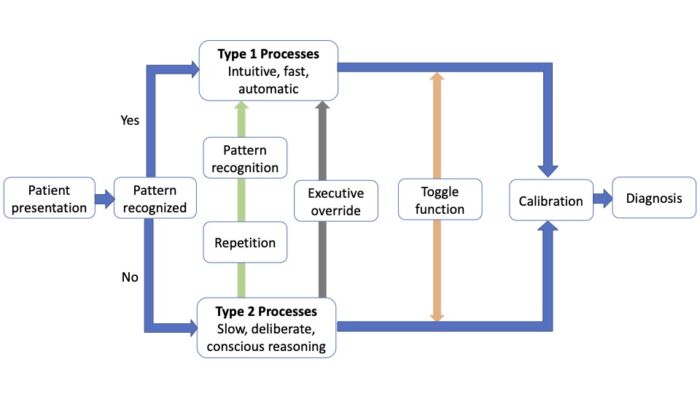

- Type 1 processing is intuitive, fast, automatic, and relies largely on pattern recognition. We spend up to 95% of our time in this intuitive mode, which is characterized by heuristics or mental shortcuts (“seen this multiple times before”). The major advantage is that it is effortless and very often accurate.3,4

- Type 2 processing is slow, analytical, effortful, and deliberative and involves conscious reasoning. The major advantage is that it can handle complex, novel problems. However, since it is slow and effortful, it is usually unsuitable for time-sensitive tasks.3,4

- Clinical decision-making usually involves a blend of intuitive and analytical processing in varying degrees (Figure 1). Our brains generally default to type 1 processing whenever possible, and we often toggle back and forth between the two systems. Repeatedly performing a skill with type 2 processing eventually allows it to be handled by type 1 processing, which is the basis of skill acquisition. This transition from type 2 to type 1 processing of cognitive tasks is particularly relevant to less experienced clinical decision makers.

Figure 1. Dual process model for clinical decision-making. Adapted from Croskerry P et al. BMJ Qual Saf. 2013.1 CC BY NC 3.0.

- While biases can occur in both processes, most biases are associated with heuristics and type 1 intuitive processes. Biases in type 1 affecting unconscious or automatic responses are termed implicit bias, while biases in type 2 affecting conscious attitudes and beliefs are termed explicit bias.4

- There are two main sources of biases: innate, hard-wired biases from our evolutionary past and acquired biases that we develop in our working environments (social/cultural habits, hidden curriculum, etc.).3

- Certain high-risk situations, such as fatigue, sleep deprivation, and cognitive overload, predispose clinicians to use type 1 processes for decision-making.3

Common Cognitive Errors in Anesthesia

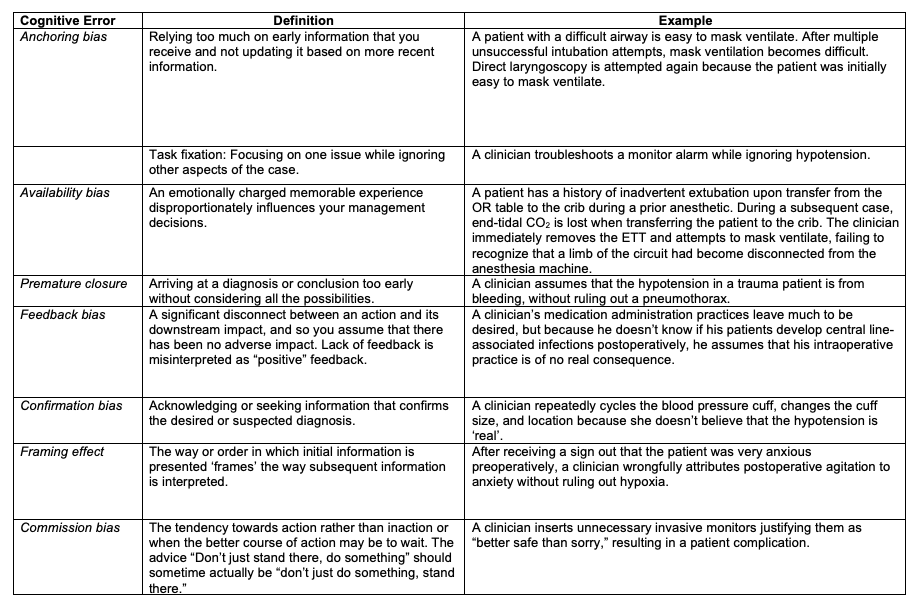

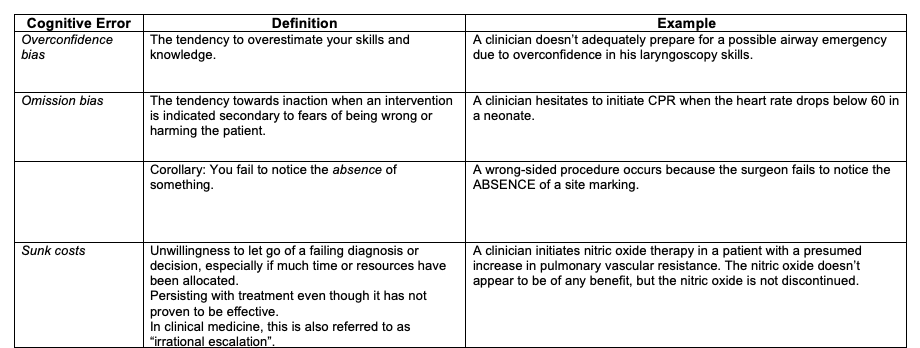

- Cognitive errors have been described in many other specialties and are thought to contribute to patient harm and misdiagnoses far more than technical errors. More than two-thirds of missed or delayed diagnoses are caused by cognitive errors. More than 100 types of cognitive errors affecting clinical decision-making have been described, many of which may contribute to adverse patient outcomes.3

Table 1, Part 1. Common cognitive errors in anesthesiology. Adapted from Stiegler MP et al. Br J Anaesth. 2012.1 and Webster CS et al. BJA Education. 2021.4

Table 1, Part 2. Common cognitive errors in anesthesiology. Adapted from Stiegler MP et al. Br J Anaesth. 2012.1 and Webster CS et al. BJA Education. 2021.4

Reducing Cognitive Errors

- Many of us are not aware of our biases and their effects on decision-making. Psychological defense mechanisms prevent us from examining our thinking, motivations, and desires.

- The key to debiasing is first to be aware of our own biases and be motivated to correct them. The next critical step involves deliberate decoupling from type 1 intuitive processing and moving to type 2 analytical processing.

- Several strategies for improving self-awareness and reducing cognitive errors have been proposed:

Educational Strategies4,5

- Educational curricula covering theories of decision-making, cognitive and affective biases, and their applications.

- Simulation scenarios with cognitive error traps.

- Teaching specific skills to mitigate biases.

Workplace Strategies4,5

- Getting more information about a case before making a diagnosis.

- Metacognition describes the process of reflecting on one’s own thought process and decision-making behavior.2 The ability to step back and observe your thinking may help.

- Slowing-down strategies: making a conscious effort to slow down to avoid premature closure.

- Mindfulness techniques help focus attention and reduce diagnostic errors.

- Seeking a second opinion or “fresh set of eyes” from a trusted colleague during a challenging case.

- Improving feedback on clinical decisions to reduce feedback bias.

- Avoiding cognitive overload, fatigue, and sleep deprivation.

- Ready availability of protocols and clinical guidelines to reduce variance.

- Incorporating checklists, cognitive aids, and clinical decision support tools into electronic medical records.

Cognitive Forcing Functions4,5

Cognitive forcing functions are rules that require the clinician to internalize and deliberately consider alternative options. Some examples include:

- Checklists such as surgical safety checklists or central line insertion checklists.

- Ruling out the worst-case scenario: a simple strategy to avoid missing important diagnoses.

- Standing rules: a given diagnosis cannot be made unless other must-not-miss diagnoses have been ruled out.

- Prospective hindsight: the clinician imagines a future in which his or her decision is wrong and then answers the question, “What did I miss?”

- Stopping rules: criteria that define when enough information has been gathered to make an optimal decision.

- While all of us are prone to develop cognitive “shortcuts” to speed and simplify decision-making, it is important to appreciate their capacity for error. A diagnosis is often merely a hypothesis, and we must take care to consider additional possibilities even as we actively manage its most immediate effects. Tools and processes designed to decrease cognitive load are particularly helpful in the clinical practice of anesthesiology, where situations are dynamic and often urgent. The impact of time pressure, workload, task complexity, and other performance-shaping factors on our ability to make important decisions quickly should not be underestimated.

References

- Stiegler MP, Neelankavil JP, Canales C, et al. Cognitive errors detected in anaesthesiology: a literature review and pilot study. Br J Anaesth. 2012;108(2):229-35.

- Stiegler MP, Tung A. Cognitive processes in anesthesiology decision making. Anesthesiology. 2014;120:204-17.

- Croskerry P, Singhal G, Mamede S. Cognitive debiasing 1: origins of bias and theory of debiasing. BMJ Qual Saf. 2013;22:ii58-64.

- Webster CS, Taylor S, Weller JM. Cognitive biases in diagnosis and decision-making during anaesthesia and intensive care. BJA Education. 2021;21(11):420-25.

- Croskerry P, Singhal G, Mamede S. Cognitive debiasing 2: impediments to and strategies for change. BMJ Qual Saf. 2013;22:ii65-72.

Copyright Information

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.